Organoid Intelligence, Quantum Computing & Theories of Consciousness

Exploring frontier technology & the ethical dilemmas shaping the future of consciousness

"If we were to lose the ability to be emotional, if we were to lose the ability to be angry, to be outraged, we would be robots."― Arundhati Roy

Consciousness remains one of the most contested and cross-disciplinary concepts in science, philosophy, and cultural traditions.

In the AI world, where our pursuit of AGI gives tech oligarchs and computer engineers god-like powers, consciousness is also a commodity which needs to be constantly redefined, realised, optimised and monetised.

1. Theories of Consciousness

2. Timeline of Consciousness Models in Tech

3. Man, Machine & Feeling

4. Synthetic Biological Intelligence (SBI) and Organoid Intelligence (OI)

5. Ethical Questions in Organoid Intelligence

6. Enter Quantum: Where It Could Help

7. Key Questions as we consider this frontier era

What is consciousness, really?

Consciousness is a complex space.

Essentially, consciousness is your awareness of yourself and the world around you. This awareness is subjective and unique to you. If you can describe something you are experiencing in words, it is part of your consciousness.

Rather than a single clear definition, the Western paradigm offers at least ten distinct frameworks that can be meaningfully tracked, spanning subjective experience, global information access, integrated information theory, and quantum coherence models.

Mapping these definitions reveals not only deep disciplinary divides, but also points of convergence across knowledge systems. These definitions emerge from fields as varied as cognitive neuroscience, philosophy of mind, AI, non-Western metaphysics, and indigenous relational worldviews.

Some treat consciousness as an emergent cognitive function: it arises from complex brain activity, a product of the brain, like heat from friction.

Others view it as a fundamental, non-local field that exists independently of the brain. The brain may simply access or channel it, like an antenna.

Each framework presents different attributes — from attention and memory, to embodiment, to spiritual or ecological awareness — shaping how we think about machine intelligence, ethical design, and the future of AI.

Theories of Consciousness

“Whoever told people that ‘mind’ means thoughts, opinions, ideas, and concepts? Mind means trees, fence posts, tiles and grasses.” —Dōgen

Enactive and embodied models in neuroscience increasingly align with Buddhist and Daoist views of cognition as relational and dynamic.

Quantum theories of consciousness echo longstanding Vedantic and Islamic philosophical ideas of non-duality and subtle intelligence.

Indigenous and animist cosmologies challenge brain-bound assumptions by treating consciousness as distributed across landscapes, materials, and kinship networks.

Four priests perform a yagna (a fire sacrifice), an old Vedic ritual in which offerings are made to Agni, the god of fire. Gouache painting by an Indian artist. Date: between 1800 and 1899 (?). Source: Wellcome Collection.

As emerging technologies like quantum computing and organoid intelligence disrupt classical boundaries of cognition, these intersecting models invite a more plural, relational, and post-anthropocentric understanding of what it means to think, feel, and know.

“People will do anything, no matter how absurd, in order to avoid facing their own souls. One does not become enlightened by imagining figures of light, but by making the darkness conscious.”

― Carl Jung, Psychology and Alchemy

As AI systems move toward greater autonomy — and quantum computing promises radically new forms of cognition — we’re facing old questions in new contexts:

What is consciousness? Who or what can have it? How should we design and govern systems that might begin to think, feel, or act?

Mainstream models of consciousness, from neuroscience and cognitive science, have shaped how we build artificial systems.

The Global Workspace Theory has inspired attention-based models in AI. The Free Energy Principle guides the design of agentic, self-updating machines. And Integrated Information Theory has been used to argue about whether an AI could ever be sentient.

But these Western frameworks are only part of the story. Across non-Western traditions, from Advaita Vedānta to Indigenous cosmologies, consciousness is not emergent or simulated — it is foundational, relational, and often non-human.

These traditions offer radically different ideas:

That mind and world co-arise, rather than being separate

That intelligence is ecological, not just computational

That consciousness might not require a brain at all

As we think about the futures of frontier technology, the pursuit of consciousness will be front and centre.

As definitions of consciousness expand beyond purely cognitive functions, there is great alignment around ideas of embodiment, relationality, and non-local cognition. This is especially relevant as emerging technologies such as quantum computing and organoid intelligence challenge the classical boundaries of cognition.

What does it mean to be? In the context of AI, this raises profound questions: What kind of entity is an AI system? Is it a subject, an object, or something else entirely? Can it have a self, or merely simulate one? — digital artist & sculptor Nick Hornby

Timeline of Consciousness Models

Western science moves from dualist metaphysics to computational and information-based models.

Non-Western traditions often treat consciousness as foundational, relational, and embodied, not emergent from physical matter.

The 21st century marks a convergence, where embodied, relational, and contemplative models are entering mainstream debates — especially in AI, mental health, and ecological thinking.

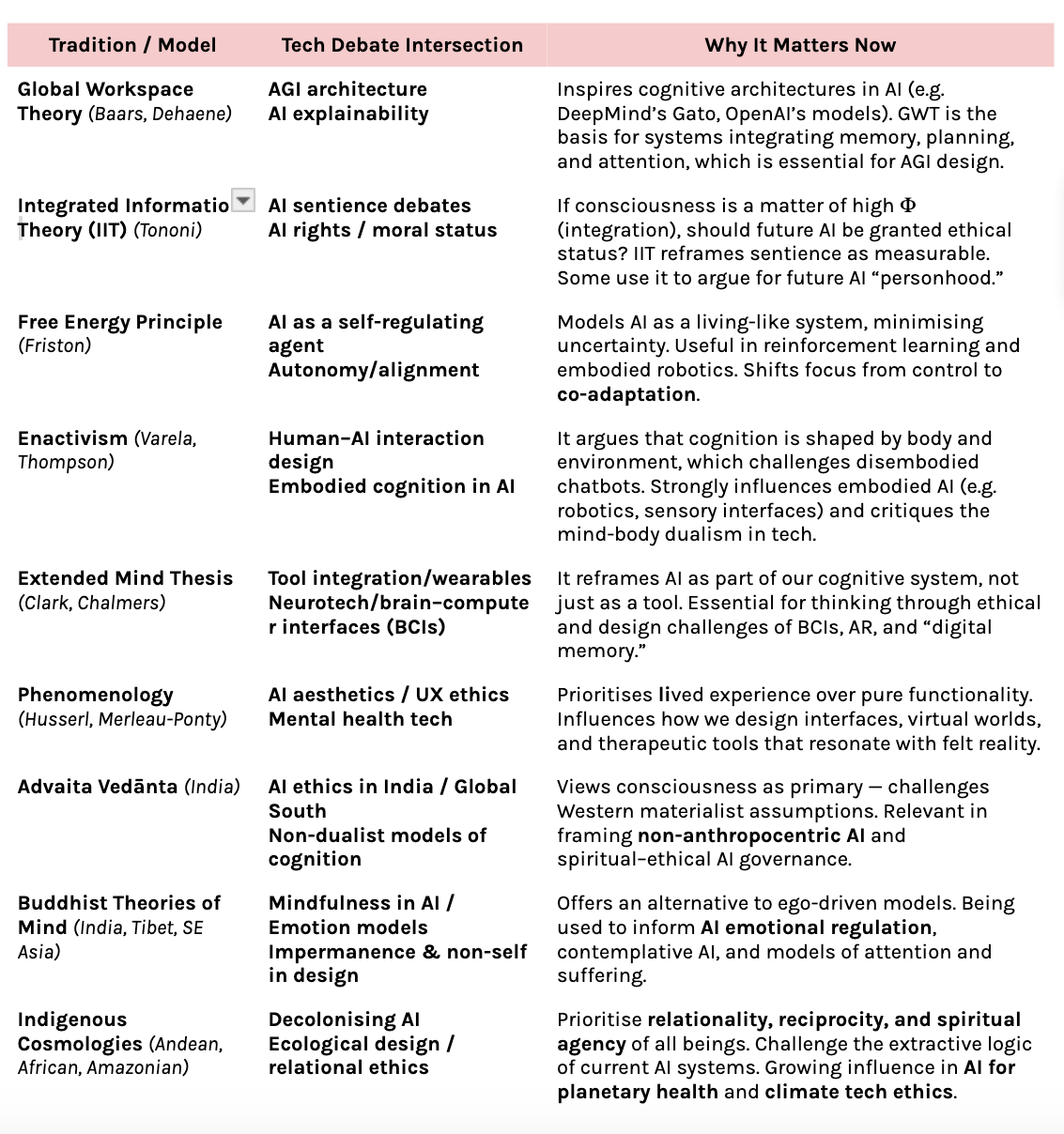

Consciousness Traditions × Tech Debates: Where They Intersect

Organoid Intelligence, the Brain & Consciousness

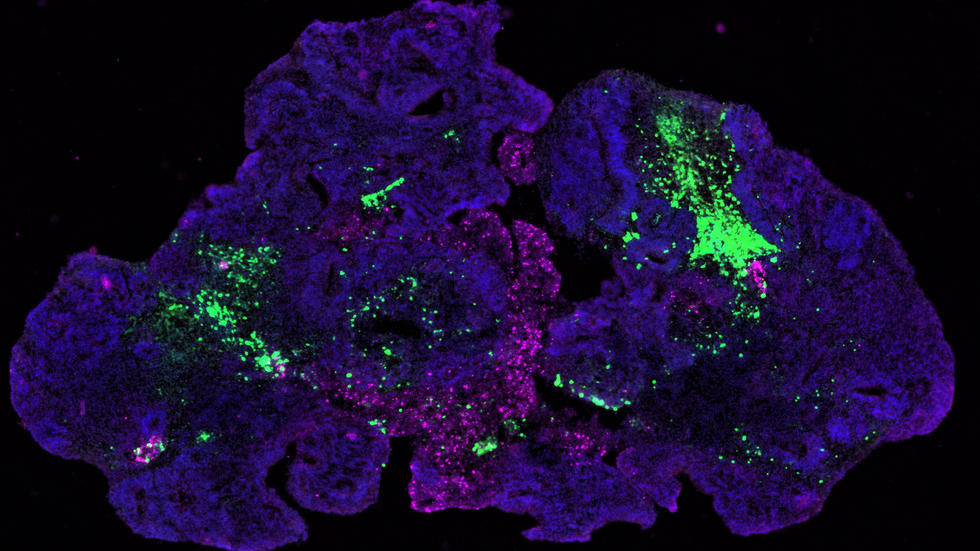

‘Organoid intelligence’ (OI) describes an emerging multidisciplinary field working to develop biological computing using 3D cultures of human brain cells (brain organoids) and brain-machine interface technologies.

An organoid is a three-dimensional tissue culture, grown in a lab, that mimics the structure and function of a specific organ in the body. Organoids are essentially “mini-organs” developed from stem cells. So, basically, it sounds a bit gross, but a brain organoid is now known as a “brain (or intelligence) in a dish.”

Harvard Stem Cell Institute, “Organoids: A new window into disease, development and discovery,” November 7, 2017.

Organoids are used to study organ development and disease, and for drug discovery. OI aims to harness the biological capabilities of brain organoids for biocomputing and synthetic intelligence by interfacing them with computer technology. This field holds promise for understanding brain development, learning, and memory, as well as for developing new treatments for neurological disorders.

Man, Machine & Feeling

“The thing is, I don't want my sadness to be othered from me just as I don't want my happiness to be othered. They're both mine. I made them, dammit.” ― Ocean Vuong, On Earth We're Briefly Gorgeous

How can we ever know if an organoid—a few million neurons in a dish—is conscious?

Unlike animals or humans, these systems can’t communicate, behave recognisably, or express suffering in ways we understand.

And yet, some already exhibit spontaneous electrical activity akin to fetal brain development, raising alarms among neuroscientists and ethicists.

Researchers like Anil Seth argue that consciousness is likely rooted in brain-body-world interaction, suggesting that organoids, lacking all three, are unlikely to be conscious in any meaningful way. As a professor of cognitive and computational neuroscience at the University of Sussex, Seth explores how consciousness arises in biological organisms and how this understanding informs debates about artificial and synthetic cognition.

“I don’t think it can be ruled out that a cerebral organoid could achieve consciousness. I think it’s entirely possible that as organoids develop in complexity and similarity to human brains that they could have conscious experiences.” Dr. Anil Seth, 2020 Nature

He has pushed for the development of ethical frameworks to guide research in this area, given the uncertainties involved.

But others, especially those working in computational neuroscience, suggest that under the right structural and functional conditions, some degree of proto-consciousness may emerge, even in minimal systems.

There’s precedent in the way we treat non-human animals in research, where the capacity to feel pain, not the ability to speak, is often the key ethical threshold. Should a similar logic apply to neural systems that can adapt, show memory, or react in unpredictable ways?

“Are Brain Organoids Conscious? AI? Christof Koch on Consciousness vs. Intelligence in IIT [Clip 4].” Christof Koch explains why, according to Integrated Information Theory (IIT), brain organoids are conscious but AI is not. Dr. Koch is a contributor to IIT and studies consciousness at the Allen Institute for Brain Science, which he formerly led.

Synthetic Biological Intelligence (SBI) and Organoid Intelligence (OI)

Synthetic Biological Intelligence (SBI) and Organoid Intelligence (OI) are leading the shift toward merging living biological systems with computational frameworks, creating groundbreaking opportunities in medicine, research, and biocomputing.

Brain-inspired computing hardware aims to emulate the structure and working principles of the brain and could be used to address current limitations in artificial intelligence technologies. However, brain-inspired silicon chips are still limited in their ability to fully mimic brain function as most examples are built on digital electronic principles.

There are many use cases, but the most obvious is using organoids as personalised disease models, a significant advancement. They provide a platform to test drug efficacy and toxicity in patient-specific contexts, moving us closer to truly individualised medicine.

Moreover, the potential to model rare diseases and genetic disorders, which often lack effective animal models or other research pathways, underscores the societal and medical value of this research.

Ethical Questions in Organoid Intelligence

Organoid intelligence doesn't just challenge technical boundaries—it pushes directly into uncharted ethical terrain. These lab-grown neural systems are not symbolic models of cognition, like AI algorithms trained on language or vision tasks. They are made of human-derived brain cells. And as their complexity increases, so does the urgency of addressing what kind of moral and legal status they might deserve.

Remember my post on Bio-ethics, the Brain & AI? Here’s a refresher. This is an urgent task for the world’s frontier tech regulators, who are still mired in basic ownership issues in AI.

1. Sentience and Consciousness

The central ethical dilemma is whether, and when, organoids could become sentient. Current brain organoids do not display anything close to human-level consciousness, but research is progressing fast. Some organoids show spontaneous electrical activity that mimics aspects of neural development in embryos. As these networks scale and begin to interact with environments—via sensors, inputs, or AI interfaces—it becomes harder to rule out the emergence of basic forms of awareness, memory, or even distress.

What responsibility do we have if these systems begin to exhibit signs of learning or self-organisation beyond what was anticipated? The very possibility demands a precautionary approach.

2. Consent and Origin

These systems are derived from human stem cells, often donated through medical procedures. But donors rarely imagine that their biological material could be used to build thinking machines. Should they be re-consented for such use? Should future research require more robust ethical review and informed consent protocols, especially as organoid use becomes more advanced?

Moreover, if organoids do develop sentience, the question shifts: not only must we consider the rights of the donors—but potentially the rights of the organoid itself.

3. Creation of Exploitable Cognition

Organoid intelligence opens up the prospect of creating systems that think—but do not have a voice, identity, or legal standing. In such a context, they could be uniquely vulnerable to exploitation. Used as computing substrates, controlled via AI interfaces, and potentially exposed to pain-like stimuli in research or training—they may never be able to express suffering, but that does not mean it doesn't exist.

This raises parallels to animal research ethics, but with a crucial difference: these are human-derived, cognitive systems, built specifically for intelligence.

4. Dual-Use and Misuse

As with all powerful technologies, organoid intelligence carries the risk of dual-use. While some researchers focus on medical applications—such as disease modelling or drug discovery—others may see value in military, surveillance, or control systems. The idea of developing sentient wetware that can be trained to optimise specific tasks creates profound concerns about autonomy, dignity, and abuse.

5. Regulatory Vacuum

OI is not fully covered by biomedical ethics frameworks (which often focus on whole organisms or patients), nor by AI ethics (which typically address non-biological systems). There is little to no established policy for entities that are not alive in the legal sense, but may someday think, learn, or feel.

This lack of legal and ethical infrastructure makes OI both a tempting and dangerous space—one that could accelerate faster than our ability to govern it.

The Brain is still way better…

[The brain] is the most complex piece of active matter in the known universe. — Christof Koch, Allen Institute for Brain Science

Why Brain-Inspired AI Hits a Wall with Classical Hardware

Current neuromorphic or brain-inspired hardware (like IBM’s TrueNorth or Intel’s Loihi) tries to replicate aspects of brain function, especially the parallelism and efficiency of neurons and synapses. But, as one headline puts it, “AI Is Nothing Like a Brain, and That’s OK.” Here’s how classical hardware falls short.

1. Digital Logic: Mimicking the brain — but only on the surface

To reproduce the input-output behaviour of even one biological neuron, a modern deep neural network needs between five and eight layers of nodes. Yet expanding artificial neural networks beyond two layers took decades. And even if the architecture could completely mimic the brain, the underlying processing would still be binary and voltage-based.

What it means:

Brain-inspired chips try to copy how the brain is wired, using artificial neurons and synapses, but the way they actually process information is still based on classical digital computing: 1s and 0s (on/off), using voltages like traditional computers.

Why it’s a problem:

The brain doesn’t work in binary. It uses analogue signals, chemical gradients, and stochastic (random) processes. So these chips may be imitating the brain’s form and shape, but are unable to replicate its deeper functional complexity or fluidity.
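To make the contrast concrete, here is a minimal sketch of what an "artificial neuron" on a neuromorphic chip roughly computes: a leaky integrate-and-fire (LIF) neuron, a simplified spiking model whose variants are used in chips such as Intel’s Loihi. The parameter values (time step, time constant, threshold, input current) are illustrative assumptions in arbitrary units, not figures from any real chip. Notice that the membrane voltage is updated in discrete time steps and the output is a train of 0s and 1s, a useful abstraction but far from the chemical and analogue richness of living neurons.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, resistance=1.0):
    """Simulate a leaky integrate-and-fire neuron with forward-Euler steps.

    input_current: one input value per time step (illustrative units).
    Returns (voltages, spikes), where spikes is a list of 0/1 values.
    All default parameters are assumptions chosen for readability.
    """
    v = v_rest
    voltages, spikes = [], []
    for i_t in input_current:
        # Leak toward rest plus drive from input: dv/dt = (-(v - v_rest) + R*I) / tau
        v += dt * (-(v - v_rest) + resistance * i_t) / tau
        if v >= v_thresh:
            spikes.append(1)  # emit a binary spike...
            v = v_reset       # ...and reset the membrane potential
        else:
            spikes.append(0)
        voltages.append(v)
    return voltages, spikes


# Usage: a constant drive for 100 time steps.
_, spikes = simulate_lif([1.5] * 100)
print(sum(spikes))  # 4
```

Everything the neuron "feels" is squeezed into one number per step and one bit of output; that discretisation is the design choice that makes such chips efficient and also what separates them from biological tissue.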

2. Scaling Limits: Tiny chips, big physics problems

As chips get smaller, we hit fundamental thermal and quantum noise challenges.

What it means:

In silicon chips, engineers try to make components (like transistors) smaller to increase performance. But at the nanoscale, weird things start happening: heat builds up, and quantum effects (like tunnelling) introduce noise and unpredictability.

Why it’s a problem:

You can’t keep shrinking things forever. Eventually, physics itself stops cooperating. That limits how far we can push performance using today’s materials and methods.

3. Energy Inefficiency: Brains are way more power-efficient

While better than GPUs, these chips still can’t touch the brain’s efficiency: the brain runs on the equivalent of about 20 W, versus the many kilowatts drawn by AI GPU systems.

What it means:

A human brain uses about 20 watts of power, the same as a dim lightbulb. In contrast, training AI models or running high-performance chips like GPUs can use thousands of watts (kilowatts).

Why it’s a problem:

AI hardware is still far more energy-hungry than biological systems. That’s a big deal when scaling AI globally — especially when thinking about sustainability, edge computing, or brain-inspired efficiency.
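A back-of-the-envelope calculation makes the gap tangible. The 20 W brain figure comes from the text; the 700 W per accelerator and the 1,000-accelerator cluster are assumed round numbers for illustration, not measurements of any specific system, and the sketch ignores cooling, CPUs, and networking, which add further overhead.

```python
BRAIN_WATTS = 20.0     # figure used in the text
GPU_WATTS = 700.0      # assumption: one high-end data-centre accelerator
CLUSTER_SIZE = 1_000   # assumption: a hypothetical training cluster


def energy_kwh(watts, hours):
    """Energy in kilowatt-hours for a constant power draw."""
    return watts * hours / 1000.0


brain_day = energy_kwh(BRAIN_WATTS, 24)
gpu_day = energy_kwh(GPU_WATTS, 24)
cluster_day = energy_kwh(GPU_WATTS * CLUSTER_SIZE, 24)

print(f"Brain, 24 h: {brain_day:.2f} kWh")
print(f"One GPU, 24 h: {gpu_day:.1f} kWh ({GPU_WATTS / BRAIN_WATTS:.0f}x the brain)")
print(f"Cluster, 24 h: {cluster_day:,.0f} kWh ({GPU_WATTS * CLUSTER_SIZE / 1e6:.1f} MW draw)")
```

Under these assumptions a single accelerator draws about 35 times the brain’s power, and a thousand of them approach the megawatt range.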

Enter Quantum: Where It Could Help

As a primer, see my post “Quantum, Art & Magic: The myths and truths in quantum mechanics, science, & spirit.”

As quantum computing reshapes what machines can do — processing probabilities, entanglements, and non-local interactions — it brings us closer to these ancient ideas.

In quantum mechanics, reality is not fixed, linear, or local. It is relational, indeterminate, and context-sensitive — much like the lived, embodied, and spiritual understandings of consciousness across non-Western systems.

This convergence is more than philosophical. It’s practical. As we design AI, brain–machine interfaces, and post-silicon cognition, we need models that go beyond the limits of classical thought.

Quantum systems might not directly simulate the brain in a literal biological sense, but they could:

1. Model Emergent Cognitive Phenomena

Quantum systems natively handle superposition, entanglement, and uncertainty — all of which may better reflect the dynamical behaviours found in organoids. While not directly simulating neurons, quantum processors could serve as co-processors capable of interpreting noisy biological signals, modelling emergent brain-like states, or even enabling new hybrid AI systems where biology and quantum algorithms interact.

Projects like Cortical Labs’ DishBrain [Kagan et al., Neuron, 2022] demonstrate that living neural systems can learn — but understanding and scaling them will require new computational paradigms. As researchers like Penrose, Hameroff, and Lloyd have proposed, quantum models may offer more natural frameworks for cognition and complexity than classical computing can provide.

Quantum computers may also be well suited to simulating complex, high-dimensional, non-linear systems, the kind found in cortical networks or consciousness models.

This could lead to new types of AI architectures based on emergence, not just logic.

Emergent architectures aim to mimic how intelligence evolves in nature—through complexity, not instructions.

Chess AI (logic-based) vs. large language models or swarm robotics (emergent behaviours).
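Emergence is easiest to see in a classic toy rather than an AI system. In Conway’s Game of Life every cell follows the same local rule (a dead cell with exactly three live neighbours is born; a live cell with two or three survives), yet a five-cell "glider" travels diagonally across the grid even though no rule mentions movement. The sketch below is a minimal plain-Python version on an unbounded grid; it is an analogy for intelligence arising "through complexity, not instructions", not a model of quantum or biological computation.

```python
from collections import Counter


def step(live):
    """Advance Conway's Game of Life by one generation.

    live: set of (row, col) coordinates of live cells on an unbounded grid.
    """
    # Count live neighbours for every cell next to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Local rule only: birth on exactly 3 neighbours, survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A glider: five live cells, no instruction to "move".
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

# After four generations the same shape reappears, one cell down and one right.
shifted = {(r + 1, c + 1) for r, c in glider}
print(state == shifted)  # True
```

The "behaviour" (a moving object) exists only at the level of the whole pattern, which is the sense in which emergent architectures differ from explicitly programmed logic.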

2. Enable Quantum Neural Networks (QNNs)

Some proposals suggest QNNs could, in theory, offer large (even exponential) speedups for important tasks like optimisation, pattern recognition, and associative memory, some of the key functions of the brain, though such advantages have yet to be demonstrated in practice.

3. Break Out of Symbolic vs. Subsymbolic Divide

Today’s models toggle between symbolic (rule-based) and subsymbolic (deep learning) systems. Quantum could allow hybrid processing that reflects how humans switch between intuition and logic more fluidly. This is very interesting and hotly debated, with some dismissing it as pseudoscience, and I think I’ll do a separate post on intuition later.

4. Hardware Parallelism at a New Scale

Quantum systems inherently process superpositions — enabling massive parallelism not achievable through classical neuromorphic circuits. This could mimic not just structural aspects of the brain but its dynamical states too.
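A minimal state-vector sketch shows what "processing superpositions" involves, and where the popular "massive parallelism" picture needs care. Applying a Hadamard gate to each of n qubits, starting from |0…0⟩, gives an equal superposition over all 2^n basis states, so a classical simulation must track 2^n amplitudes. But measuring the register returns only one basis state at random; useful quantum algorithms rely on interference to make the right answers likely. This is a plain-Python textbook toy, not a model of any real quantum hardware or of the brain.

```python
import math
import random


def apply_hadamard(state, q):
    """Apply a Hadamard gate to qubit q of a real-valued state vector."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i0 in range(len(state)):
        if (i0 >> q) & 1 == 0:      # pair each index having bit q = 0...
            i1 = i0 | (1 << q)      # ...with its partner having bit q = 1
            a0, a1 = state[i0], state[i1]
            new[i0] = s * (a0 + a1)  # H|0> = (|0> + |1>) / sqrt(2)
            new[i1] = s * (a0 - a1)  # H|1> = (|0> - |1>) / sqrt(2)
    return new


def uniform_superposition(n):
    """Return the 2**n amplitudes after applying H to every qubit of |0...0>."""
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    for q in range(n):
        state = apply_hadamard(state, q)
    return state


def measure(state, rng):
    """Sample one basis-state index with probability |amplitude|**2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs, k=1)[0]


state = uniform_superposition(3)
print(len(state))                            # 8 amplitudes for 3 qubits
print(round(sum(a * a for a in state), 10))  # 1.0: probabilities sum to one
print(measure(state, random.Random(0)))      # one index in range(8), not all eight
```

Each extra qubit doubles the list a classical computer must store, which is the real sense in which quantum state spaces are "large"; reading all of those amplitudes out, however, is not possible in a single measurement.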

5. Quantum Chips Could Be More Relevant Than GPUs for Organoid Intelligence

As organoid intelligence (OI), the use of living brain-like tissue for computation, begins to move from experiment to emerging field, we face a key question: what kind of hardware is best suited to interface with, interpret, and augment these biological systems (in other words, the brain)?

Today’s experiments rely heavily on GPUs, which are excellent at processing large datasets and training deep learning models.

They also support real-time analysis of organoid activity, such as neural spike data. But GPUs, like all classical chips, are grounded in digital logic: binary, deterministic, and energy-intensive. That logic is increasingly mismatched with the nonlinear, adaptive, and probabilistic nature of living neural tissue.

Importantly, quantum chips also promise radical energy efficiency compared to GPUs, a critical factor as OI scales. The human brain runs on ~20W; by contrast, training large AI models can consume megawatts. If quantum processors can be stabilised and miniaturised (e.g. through photonic or topological designs), they could outperform GPUs not just in capability but sustainability.

6. Interface with the Brain More “Naturally”

Some researchers suggest quantum-inspired devices might interface more naturally with biological systems — perhaps even enabling quantum biosensing or entangled feedback loops for BCI (brain-computer interfaces).

Please don’t freak out; I did. This is all very weird for regular-brain people, and there’s research suggesting that quantum computing “behaviour” could be superimposed back onto our wet, breathing brains. I don’t understand this at all, so please do read here.

There’s still no proof that quantum effects are needed to explain consciousness or cognition, but some researchers, like physicist Roger Penrose and anaesthesiologist Stuart Hameroff, argue it's plausible.

Regardless of whether you believe in “quantum consciousness,” building brain-inspired AI on quantum platforms could unlock architectures that don’t mimic neurons, but emulate cognition more deeply.

UCL Quantum Science and Technology Institute

Key Questions as we consider this frontier era

What counts as consciousness in this context?

Are we talking about sentience (ability to feel), awareness (of self or surroundings), or just complex neural activity?

Can consciousness emerge in simplified, miniature systems with no sensory input or body?

What are the scientific indicators (if any)?

Spontaneous electrical activity (EEG-like waves)

Ability to learn or adapt to stimuli (e.g., Pong experiments)

Functional connectivity or memory-like behaviour

Does consciousness require embodiment?

Some argue a brain alone, without a body or environment, cannot be conscious.

Others suggest that consciousness is a gradient, not binary—and that even partial systems may experience something.

Ethical thresholds:

Should we act on the possibility of consciousness before we have proof?

What moral weight do we assign to these borderline or ambiguous cases?